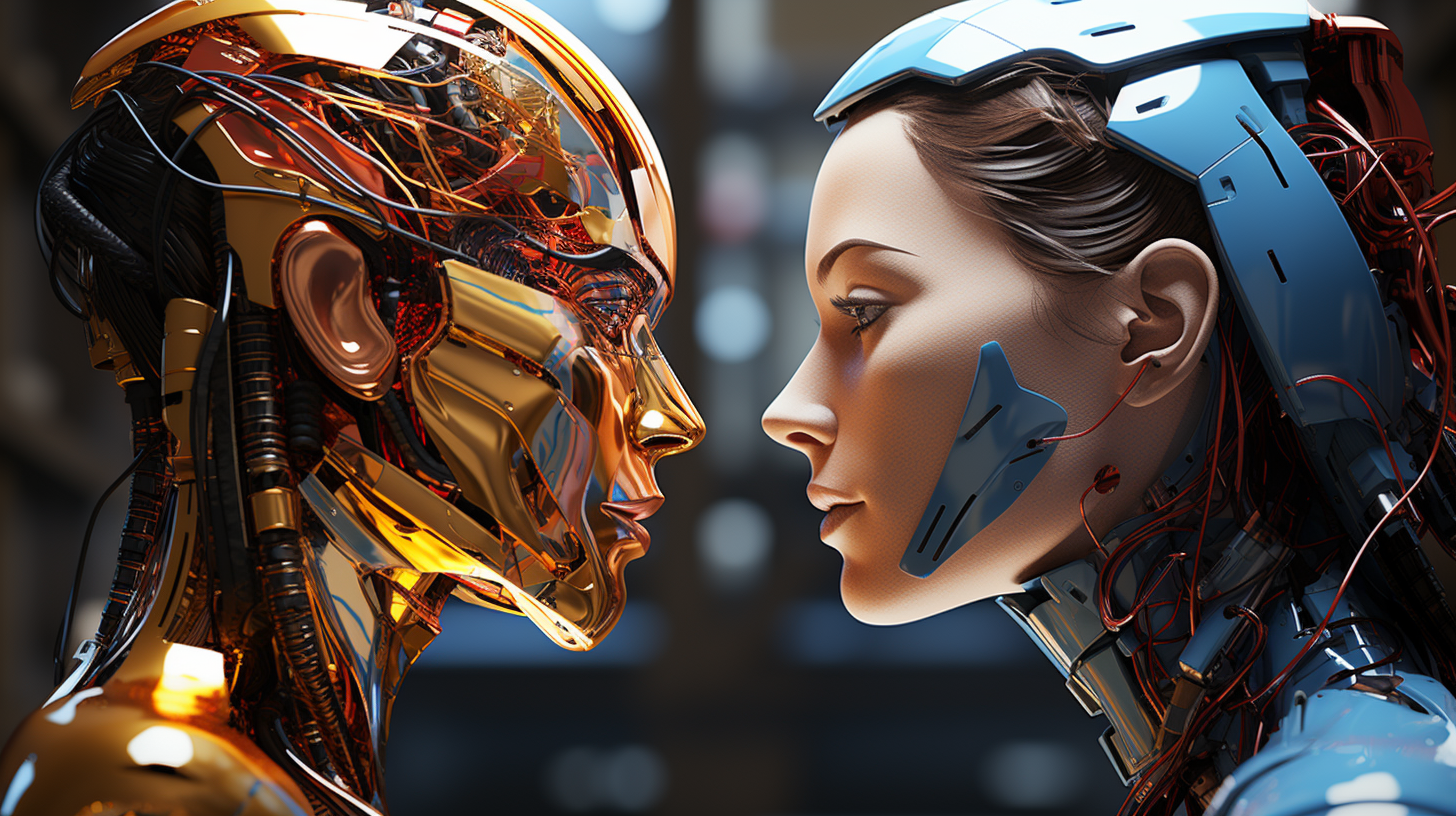

Stable Diffusion versus DALL-E: The Battle of the AI Art Gods

AI-powered image generators have been making waves in recent years, and it's no surprise why. These advanced algorithms generate unique, never-before-seen images and visuals that seemed impossible just a few years ago.

When looking for an AI image generator, it's crucial to keep several factors in mind. How user-friendly is it, how much can you customize, and is it capable of producing professional-grade images? However, finding the perfect AI solution is only half the battle. It's equally important to democratize these tools and make them accessible to all, so that everyone can harness their potential for personal and professional use. Wider access fosters innovation, creativity, and progress.

And with the exponential growth of AI technology, there are countless opportunities for advancement and enrichment. So, let's continue to explore and nurture the exciting world of image generator AIs.

I have put Stable Diffusion and DALL-E to the test to see how well they work, which one is better, and how to choose your AI image generator tool.

Master the Art of Stable Diffusion: Capabilities, Limitations, and How it Works

Stable Diffusion is an open source text-to-image model created by Stability AI. Given a text description, called a prompt, it generates high-quality images and digital compositions that match it.

It generally works better with descriptive text prompts that include lots of specific details, such as texture, colors and tones, and artistic styles.
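One way to get into the habit of writing detailed prompts is to assemble them from named parts. The sketch below is only an illustration: `build_prompt` and its parameters are hypothetical helpers, not part of Stable Diffusion or any official tool, and the phrasing it produces is just one reasonable convention.

```python
def build_prompt(subject, texture=None, colors=None, style=None):
    """Join a subject with optional descriptive details into one prompt string.

    Hypothetical helper for illustration; the wording conventions are assumptions.
    """
    parts = [subject]
    if texture:
        parts.append(f"{texture} texture")
    if colors:
        parts.append(f"{' and '.join(colors)} color palette")
    if style:
        parts.append(f"in the style of {style}")
    return ", ".join(parts)


# A bare subject versus the same subject with texture, colors, and style added.
print(build_prompt("a lighthouse on a cliff"))
print(build_prompt(
    "a lighthouse on a cliff",
    texture="rough oil paint",
    colors=["teal", "amber"],
    style="impressionism",
))
```

The second prompt gives the model far more to work with than the first, which is the kind of detail that tends to pay off with Stable Diffusion.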

Stable Diffusion's training is based on three massive datasets assembled by LAION, a non-profit whose computing time was paid for by Stability AI, the company that owns Stable Diffusion.

Training on datasets of this size helps Stable Diffusion produce highly detailed images with a high degree of realism.

Stable Diffusion is far from perfect. Its accuracy is limited, and it can produce unsatisfactory output if the text input doesn't include all the relevant information. Still, its ease of use and scalability make it a great tool for creative projects, and users can improve their results by learning to write better text prompts.

Exploring DALL-E: Capabilities, Limitations and Inner Workings

DALL-E, whose name is a portmanteau of the artist Salvador Dalí and Pixar's WALL-E, is a popular AI image generator designed to create images from textual descriptions. This cool tool was created by OpenAI and is available only through OpenAI itself or through other services that use its API. It's not open source, but hey, it's still worth checking out!

DALL-E is trained on a massive dataset of images and textual descriptions, making it highly skilled in recognizing objects, colors, shapes, and backgrounds.

Like Stable Diffusion, DALL-E takes textual descriptions and turns them into images. It works best when given simple and straightforward descriptions, which it tends to translate faithfully into images. For example, if you want an image of a red chair, you can just type "red chair" into DALL-E and it will generate an appropriate image.

Battle of the AI Art Gods: Stable Diffusion vs. DALL-E!

Both Stable Diffusion and DALL-E use a technique called diffusion for image generation.

The image generator begins with a random field of noise and then modifies it in small increments until it matches the model's interpretation of the prompt. Each attempt starts from a different random noise field, which is why identical prompts produce distinct results.

It's like staring at the sky full of clouds, finding one that vaguely looks like a unicorn, and then magically transforming it into an even more unicorn-like shape.
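To make the idea concrete, here is a deliberately tiny sketch of that loop. It is a toy, not how either model is implemented: real diffusion models use a trained neural network to predict what noise to remove at each step, whereas this example assumes the "interpretation of the prompt" is simply a known list of numbers called `target`. The function names, the `rate` parameter, and its value are all assumptions made for illustration.

```python
import random


def toy_denoise(target, steps=10, seed=0, rate=0.2):
    """Start from random noise and nudge it toward `target` in small increments.

    Toy stand-in for diffusion: `target` plays the role of the model's
    interpretation of the prompt, which a real model has to predict.
    """
    rng = random.Random(seed)
    # Begin with a random field of noise, one value per "pixel".
    image = [rng.gauss(0.0, 1.0) for _ in target]
    for _ in range(steps):
        # Close a fixed fraction of the remaining gap at every step.
        image = [x + rate * (t - x) for x, t in zip(image, target)]
    return image


def distance(a, b):
    """Total absolute difference between two equally sized lists."""
    return sum(abs(x - y) for x, y in zip(a, b))


target = [0.0, 0.5, 1.0, 0.5, 0.0]

same_seed_a = toy_denoise(target, seed=1)
same_seed_b = toy_denoise(target, seed=1)
other_seed = toy_denoise(target, seed=2)

print(same_seed_a == same_seed_b)  # same starting noise, same result
print(same_seed_a == other_seed)   # different starting noise, different result
print(distance(toy_denoise(target, steps=5, seed=1), target))
print(distance(toy_denoise(target, steps=20, seed=1), target))
```

Two things carry over to the real tools: the starting noise (the seed) determines which variation you get, and more steps bring the result closer to what the model is aiming for.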

But which one is better?

Though both models have similar technical underpinnings, Stability AI and OpenAI have fundamentally different ideas on how their respective AI tools should function. Stable Diffusion and DALL-E were trained on different datasets, and distinct design and implementation choices were made during their development. In short, while both tools can achieve similar results, their outputs can be dramatically different.

Stable Diffusion versus DALL-E: type of software and ease of use

Stable Diffusion is open source and free to use, and this can be a convincing argument for many users. However, it might be more difficult to use as it includes many specific features that you need to learn.

On the other hand, DALL-E has an extremely simple interface that lets you type in your text prompt, hit enter, and enjoy the results. You have to buy credits to be able to use DALL-E, so that can be a limitation or disadvantage for some.

Stable Diffusion versus DALL-E: image results

In terms of final results, the most obvious difference is that DALL-E works better with photorealistic and product images, while Stable Diffusion can add a more artsy feel to its results.

Both Stable Diffusion and DALL-E produce high-quality AI-generated images, and their results are satisfactory.

Stable Diffusion has the ability to produce artistic images, making it a top choice for visually-driven industries like entertainment and advertising. Meanwhile, DALL-E is ideal for web design and product retouching, as it can effortlessly generate images that match specific textual descriptions.

Stable Diffusion versus DALL-E: features and control

In terms of options and features, DALL-E is limited to image generation, inpainting, and outpainting. Stable Diffusion, on the other hand, offers a few more options, including image enhancement and more control over the generative process.

With Stable Diffusion, you can customize the number of steps, the initial seed, and the prompt strength, and you can add a negative prompt, all within the DreamStudio web app.
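If you experiment a lot, it helps to write these settings down so a result can be reproduced or varied one knob at a time. The sketch below is a hypothetical record of those four controls; `GenerationSettings`, its field names, and its default values are assumptions for illustration and do not reflect DreamStudio's actual interface or API.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class GenerationSettings:
    """Hypothetical record of the controls described above (names are assumptions)."""
    prompt: str
    negative_prompt: str = ""      # things you do not want in the image
    steps: int = 30                # number of refinement steps
    seed: Optional[int] = None     # None means "let the tool pick a random seed"
    prompt_strength: float = 7.0   # how strongly the prompt steers the result

    def __post_init__(self):
        if not self.prompt.strip():
            raise ValueError("prompt must not be empty")
        if self.steps < 1:
            raise ValueError("steps must be at least 1")
        if self.prompt_strength < 0:
            raise ValueError("prompt_strength must be non-negative")


# Fix the seed so the same settings can be reused later.
base = GenerationSettings(prompt="a red chair", negative_prompt="blurry", seed=42)

# Change one setting at a time to see what it does to the image.
more_steps = replace(base, steps=50)

print(base)
print(more_steps)
```

Keeping the seed fixed while changing only the steps or the prompt strength makes it much easier to tell which setting caused a difference in the output.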

Selecting the best AI image generator depends heavily on intent and personal preference. For users seeking to create artistic, customizable images from existing visual data, Stable Diffusion presents a superior option. On the other hand, if creating images according to textual descriptions is the goal, DALL-E takes the crown as the go-to choice.

Final thoughts on Stable Diffusion and DALL-E

DALL-E is all the rage when it comes to AI image generation, but if you want to save some bucks and get more usage rights, try Stable Diffusion. It's like the thrift shop version of DALL-E, but with more power and a free trial. And if you're really into it, you can even use it to create your own custom generative AI.

But remember, the user experience is just as important as the quality of the output. Both models can create some amazing, funny, and downright weird images – depending on the prompt you give them. And who knows, you might end up using a third-party app that's built on one of these two models – in which case, you won't even notice the difference!